Huge Unix File Compressor Shootout with Tons of Data/Graphs

It has been a while since I’ve sat down and taken inventory of the compression landscape for the Unix world (and for you new school folks that means Linux, too). I decided to take the classics, the ancients, and the up and coming and put them to a few simple tests. I didn’t want to do an exhaustive analysis but rather just a quick test of a typical case. I’m personally interested in three things:

- Who has the best compression?

- Who is the fastest?

- Who has the best overall performance in most cases (a good balance of compression and speed)? I thought that average bytes saved per second illustrated this best, so that’s the metric I’ll be using.
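As a rough illustration of that metric, here is a minimal Python sketch. It assumes “bytes saved” means original size minus compressed size, and the function name and sample numbers are made up for the example rather than taken from the benchmark.

```python
def bytes_saved_per_second(original_size: int, compressed_size: int, seconds: float) -> float:
    """Bytes removed from the file per second of wall-clock compression time."""
    if seconds <= 0:
        raise ValueError("elapsed time must be positive")
    return (original_size - compressed_size) / seconds


# Hypothetical numbers for illustration only (not measured results):
# a 200,000,000-byte file squeezed to 50,000,000 bytes in 30 seconds.
score = bytes_saved_per_second(200_000_000, 50_000_000, 30.0)
print(f"{score:,.0f} bytes saved per second")
```

A tool that compresses a little less but finishes much sooner can score higher here than one that squeezes out every last byte.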

The Challengers

- ARJ32 v 3.10

- lzop 1.01

- netbsd gzip 20060927 (sorry GNUs, I have a BSD box)

- bzip2 1.0.5

- zoo 2.1

- xz (XZ Utils) 4.999.9beta w/ liblzma 4.999.9beta

- zip 3.0

- rzip 2.1

- lha 1.0.2

- p7zip 9.04 (this is 7zip for Unix variants)

A few of these are based on the newish LZMA algorithm, which definitely gives some good compression results. Let’s see how it does on the wall-clock side of things, too, eh? Also, you’ll note some oldie-but-goldie tools like arj, zoo, and lha.

How it was Done

I wrote a script in Ruby that will take any file you specify and compress it with a ton of different commands. The script flushes I/O before and after and records the epoch time with microsecond resolution. It took a little time since there are a few different forms the archiver commands take. Mainly there are two-argument and one-argument styles. The one-argument style is the “true” Unix style since it works in the most friendly fashion with tar and scripts like zcat. That’s okay though, it’s how well they do the job that really matters.
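For readers who want to try something similar, here is a minimal sketch of the timing step. It is written in Python rather than Ruby and is not the original script. Where exactly the sync calls sit relative to the clock is an assumption, and `time_command` is a hypothetical helper name.

```python
import os
import subprocess
import sys
import time


def time_command(argv):
    """Run one command and return the elapsed wall-clock time in seconds."""
    os.sync()                 # flush pending writes before starting the clock
    start = time.time()       # epoch time with sub-second resolution
    subprocess.run(argv, check=True)
    os.sync()                 # flush again so the written output is counted
    return time.time() - start


# Placeholder command so the sketch runs anywhere. Swap in a real invocation:
#   one-argument style: ["gzip", "-9", "somefile"]
#   two-argument style: ["zip", "somefile.zip", "somefile"]
elapsed = time_command([sys.executable, "-c", "pass"])
print(f"{elapsed:.6f} seconds")
```

Passing the command as a list keeps the one-argument and two-argument styles in the same harness without any shell quoting.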

My script spits out a CSV spreadsheet and I graphed the results with OpenOffice Calc for your viewing pleasure.

The Reference Platform

Doesn’t really matter, does it? As long as the tests run on the same machine and it’s got modern CPU instructions, right? Well, not exactly. First off, I use NetBSD. No, BSD is no more dead than punk rock. One thing I didn’t test was the parallel versions of bzip2 and gzip, which would be pbzip2 and pigz. These are cool programs, but I wanted a test of the algorithms more than the parallelism of the code. So, just to be safe, I also turned off SMP in my BIOS while doing these tests. The test machine was a cheesy little Dell 530s with an Intel C2D E8200 @ 2.6GHz. The machine has a modest 3GB of RAM. The hard disk was a speedy little 80GB Intel X25 SSD. My script syncs the file system before and after every test so that file I/O and cache flushes wouldn’t get in the way (or would get in the way as little as possible). Now for the results.

Easy Meat

Most folks understand that some file types compress more easily than others. Things like text, databases, and spreadsheets compress well while MP3s, AVI videos, and encrypted data compress poorly or not at all. Let’s start with an easy test. I’m going to use an uncompressed version of Pkgsrc, the 2010Q1 release to be precise. It’s full of text files, source code, patches, and a lot of repeated metadata entries for directories. It will compress between 80-95% in most cases. Let’s see how things work out in the three categories I outlined earlier.

You’ll notice that xz is listed twice. That’s because it has an “extreme” flag as well as a -9 flag, so I wanted to try both. I found you typically get about 1% better compression from extreme mode for about 20-30% more time spent compressing. Still, it gets great results either way. Here’s what we can learn from the data so far:

- LZMA based algorithms rule the roost. It looks like xz is the king right now, but there is very little edge over the other LZMA-based compressors like lzip and 7zip.

- Some of the old guys like arj, zip, and lha all compress just about as well as the venerable gzip. That’s surprising to me. I thought they would trail by a good margin and that didn’t happen at all.

- LZOP (which is one of my anecdotal favorites) didn’t do well here. It’s clearly not optimized for size.

- The whole bandpass is about 10%. In other words, there isn’t a huge difference between anyone in this category. However, at gargantuan file sizes you might get some more mileage from that 10%. The test file here is 231 megs.

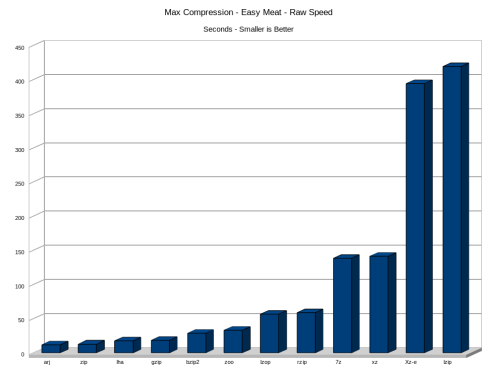

Okay, let’s move on while staying in the maximum compression category. How about raw speed? Also, who got the best compressor throughput? In other words, if you take the total savings and divide by the time it took to do the compression, who seems to compress “best over time”? Here are a couple more graphs.

Wow, I really was surprised by these results! I thought for sure that the tried and true gzip would take all comers in the throughput category. That is not at all what we see here. In fact, gzip was lackluster in this entire category. The real winners here were, surprisingly, the ’90s favorites arj and lha, with an honorable mention to zip. Another one to examine here is bzip2. It’s relatively slow compared to arj and zip, but considering the compression it delivers, there is still some value there. Lastly, we can see that the LZMA crowd is sucking wind hard when it comes to speed.

Maximum Compression on Easy Meat Conclusion

Well, as with many things, it depends on what your needs are. Here’s how things break down:

- The LZMA tools (with a slight edge to xz) have the best compression.

- Arj & lha strike a great balance. I just wish they didn’t have such DOS-like syntax and that they worked with stdin/stdout.

- Gzip does a good job in terms of performance, but is lackluster on the “stupendous compression” front.

- The “extreme” mode for xz probably isn’t worth it.
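To check the extreme-mode tradeoff on your own data, a sketch along these lines may help. It uses Python’s standard `lzma` module (the xz format) instead of the xz command line, so absolute numbers will differ from the graphs. The sample input and the `lzma_trial` helper are invented for illustration, and the demo uses preset 6 only because preset 9 needs considerably more memory.

```python
import lzma
import time


def lzma_trial(data: bytes, preset: int):
    """Compress data with the given preset; return (compressed_size, seconds)."""
    start = time.perf_counter()
    compressed = lzma.compress(data, preset=preset)
    return len(compressed), time.perf_counter() - start


# Stand-in input; replace with the bytes of a real file for a meaningful result.
sample = b"".join(b"patch-%05d: fix build on NetBSD\n" % i for i in range(20000))

LEVEL = 6  # set to 9 to mirror the -9 runs discussed above (uses much more RAM)
for label, preset in (("plain", LEVEL), ("extreme", LEVEL | lzma.PRESET_EXTREME)):
    size, seconds = lzma_trial(sample, preset)
    print(f"{label:<8} {size:>8} bytes  {seconds:.3f} s")
```

Comparing the two rows shows whether the extra time buys a size reduction worth having on your kind of file.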

Normal Compression – Easy Meat

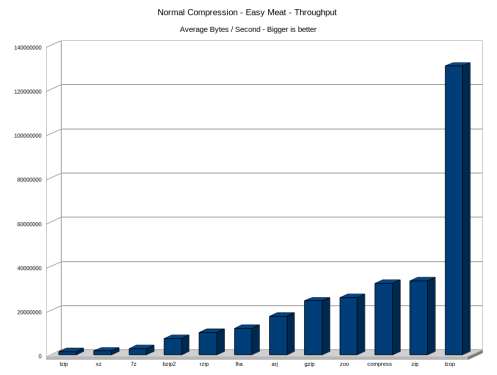

Well, maximum compression is all fine and well, but let’s examine what the archivers do in their default modes. This is generally the place the authors put the most effort. Again we’ll use a 231MB file full of easily compressed data. Let’s first examine the graphs. First up, let’s just see the compression sizes:

Well, nobody really runs away with it here. The bandpass is about 75-91%. Yawn. A mere 16% span between zoo and xz? Yes, that’s right. Maybe later when we look at other file types we can see a better spread. Let’s move on and see how well the throughput numbers compare.

Well, this is interesting! Here’s where my personal favorite, lzop, is running away with the show! In its default mode, lzop gets triple the performance of its closest neighbor. The spread between the top and bottom of the raw speed category for normal compression is a massive 158 seconds. It’s important to note that the default compression level for lzop is -3, not -1, so it should be able to do better still. Yeah lzop! I guess I should also point out that down in the lower ranks, gzip kept up with the competition, and considering its good compression ratio, it’s a solid contender in the “normal mode” category.

Light Mode – Because My Time Matters

If you have a lot of files to compress in a hurry, it’s time to look at the various “light” compression options out there. The question I had when I started was “will they get anywhere near the compression of normal mode”. Well, let’s have a look-see, shall we?

Well, looks like there is nothing new to see here. LZMA gets the best compression, followed by the Huffman crowd. LZOP trails again in the overall compression ratio. However, it’s important to note that there is only a 9% difference between what LZOP achieved in 1.47 seconds and what lzip took a staggering 40.8 seconds to do. There was a 7% difference between LZOP and bzip2, but there was a 25 second difference in their times. It might not matter to some folks how long it takes, but it usually does for me since I’m personally waiting on that archive to finish or that compressor script to get things over with.

Okay, maybe LZOP’s crushing the competition was because it defaults to something closer to everyone’s “light” mode (typically a “-1” flag to Unix-style compressors but the mileage varies for DOS-style). There is one way to find out:

Um. No. Lzop still trounces everyone soundly and goes home to bed while the others are still working. Also, keep in mind that the throughput number only measures compressed bytes, not the total raw size of the file. If we went with raw bytes, the results would be even more dramatic, but less meaningful. Now if we could get it in a BSD-licensed version with a -9 that beats xz, then it could conquer the compression world in a hurry! As it stands, nothing matches, or even comes close to, its raw throughput power.
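The level tradeoff can be explored without installing every archiver. The sketch below uses Python’s standard-library codecs as stand-ins: `zlib` for the deflate family, `bz2` for bzip2, and `lzma` for xz. There is no LZO codec in the standard library, so it cannot reproduce the lzop results. The sample data, the helper name, and the choice of levels 1 and 6 are assumptions for the example.

```python
import bz2
import lzma
import time
import zlib

# Stand-ins only: library codecs, not the command-line tools that were benchmarked.
CODECS = {
    "deflate": lambda data, level: zlib.compress(data, level),
    "bzip2": lambda data, level: bz2.compress(data, level),
    "lzma": lambda data, level: lzma.compress(data, preset=level),
}


def compare_levels(data: bytes, levels=(1, 6)):
    """Return rows of (codec, level, compressed_size, seconds)."""
    rows = []
    for name, compress in CODECS.items():
        for level in levels:
            start = time.perf_counter()
            size = len(compress(data, level))
            rows.append((name, level, size, time.perf_counter() - start))
    return rows


sample = b"".join(b"line %06d of some easily compressed text\n" % i for i in range(20000))
for name, level, size, seconds in compare_levels(sample):
    saved = 100.0 * (1 - size / len(sample))
    print(f"{name:<8} -{level}  {saved:5.1f}% saved  {seconds:.3f} s")
```

Feeding it the bytes of a real archive instead of the synthetic sample gives a quick feel for how much extra time each codec spends going from light to heavier settings.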

Next up – Binary compression tests

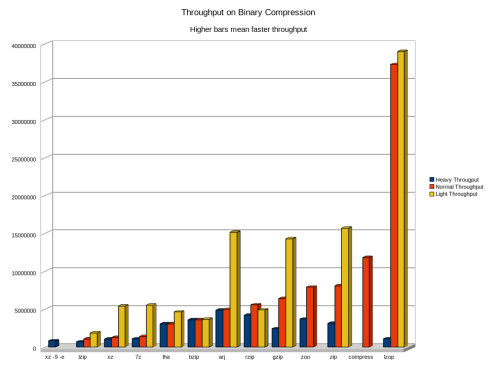

Okay, now that we tried some easy stuff, let’s move on to something slightly more difficult and real world. Binary executables are definitely something we IT folks compress a lot. Almost all software distributions come compressed. As a programmer, I want to know I’m delivering a tight package and smaller means good things to the end user in most contexts. It’s certainly going to help constrain bandwidth costs on the net which is of even more importance for free software projects that don’t have much cash in the first place. I chose to use the contents of all binaries in my Pkgsrc (read “ports” for non-NetBSD users). That was 231 megs of nothing but binaries. Here’s the compression and throughput graphs. You might need to click to get a larger view.

Well, clearly the LZMA compression tools are the best at compressing binary files. They consistently do better at light, normal, and heavy levels. The Huffman and Lempel-Ziv based compressors trail by around 10-20% and then there are a few real outliers like zoo and compress.

Well, after the last bashing LZOP handed out in the Easy Meat category, this comes as no big surprise. It’s interesting to note that LZOP doesn’t have any edge in -9 (heavy) mode. I’d conclude that you should just use xz if you want heavy compression. However, in normal and light modes, LZOP trashes everyone and manages to get at least fair levels of compression while doing it. To cut 231MB in half in under 3.5 seconds on a modest system like the reference system is no small feat.

Compression Edge Cases

What about when a compression tool goes up against something like encrypted data that simply cannot be compressed? Well, then you just want to waste as little time trying as possible. If you think that this is going to be the case for you, ARJ and LZOP win hands down with ZIP trailing a ways back in third place. I tried a 100MB encrypted file and nobody came close to those two in normal mode. I also tried using AVI and MP3 files and the results were the same. No compression, but some waste more time trying than others.
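The effect is easy to reproduce on a small scale. This sketch uses random bytes as a stand-in for encrypted data and Python’s `zlib` (deflate) as the compressor; both choices are assumptions for illustration, and `saved_fraction` is a hypothetical helper.

```python
import os
import zlib


def saved_fraction(data: bytes, level: int = 6) -> float:
    """Fraction of the input removed by deflate (negative if the output grew)."""
    return 1 - len(zlib.compress(data, level)) / len(data)


random_blob = os.urandom(1_000_000)   # stands in for encrypted data
text_blob = b"the quick brown fox jumps over the lazy dog\n" * 20_000

print(f"random bytes: {saved_fraction(random_blob):+.2%} saved")
print(f"plain text:   {saved_fraction(text_blob):+.2%} saved")
```

A script that handles mixed data could run a probe like this on the first chunk of a file and skip compression when almost nothing is saved.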

Compression Fuzzy Features

There is certainly a lot more to a compression tool than its ability to compress or its speed. A well-devised and convenient structure and a nice API also help. I think this is one reason that gzip has been around for so long. It provides decent compression, has been around a long time, and has a very nice CLI and API (libz). I also believe that tools that don’t violate the “rule of least surprise” should get some cred. The best example of this is gzip because it pretty much sets the standard for others to follow (working with stdin/stdout, numbered “level” flags, etc.). However, gzip is really starting to show its age, and considering the amount of software flying around the net, it’s wasting bandwidth. It’s certainly not wasting much from the perspective of the total available out there on the net (most of which goes for video and torrents statistically anyhow). However, if you are the guy having to pay the bandwidth bill, then it’s time to look at xz or 7zip. My opinion is that 7z provides a more DOS/Windows-centric approach and xz is the best for Unix variants. I also love the speed of LZOP, and congrats to the authors for a speed demon of a tool. If your goal is to quickly get good compression, look no further than LZOP.

You might need some other features like built-in parity archiving or the ability to create self-extracting archives. These are typically things you are going to find in the more commercial tools. Pkzip and RAR have features like these. However, you can get to the same place by using tools such as the PAR parity archiver.

There are also tools that allow you to perform in-place compression of executable files. They allow the executable to sit around in compressed form then dynamically uncompress and run when called. UPX is a great example of this and I believe some of the same folks involved with UPX wrote LZOP.

More Interesting Compression Links

There sure are a lot of horrible reviews when you do a Google search for file compression reviews. Most of them are Windows-centric and try to be “fair” in some arbitrary way by comparing all the fuzzy features and counting compression and speed as just two of about 20 separate and important features. Maybe for some folks that’s true. However, in my opinion, those two features are the key features and everything else must play second fiddle. Sure, it might be nice to have encryption and other features in your archiver, but are you too lazy to install GPG? I take seriously the Unix philosophy of writing a small utility that does its primary job extremely well. That said, there are some good resources out there.

- Wikipedia has a great writeup with info about the various archivers out there in its comparison of file archivers.

- Here’s a site which focuses more on the parallel compression tools and has a really great data set for compression which is much more comprehensive than what I did. It’s CompressionRatings.com

- Along the same lines as the last site is the Maximum Compression site. They do some exhaustive compression tests and cover decompression, too. I didn’t focus on decompression because most utilities are quite fast at it and the comparisons are trivial.

- TechArp has a good review of the stuff out in the Windows world. However, their site is bloated with annoying flashing ads. If you can ignore those, then check it out.

Happy compressing!

In my tests, lzop’s levels 1 through 6 are virtually identical in both time and space, and levels 7 through 9 are virtually the same in space (but 8 and 9 are significantly slower). So only levels 1 and 7 are worth bothering with.

However, QuickLZ appears to be both significantly(!) faster and a better compressor than LZO, at least per Eli Collins’ benchmark posted on one of the Hadoop bugs: https://issues.apache.org/jira/browse/HADOOP-6349 . He also found FastLZ level 2 to be comparable to LZO’s default, while LZF’s “VF” mode (apparently) is roughly comparable to FastLZ’s level 1 (but slightly slower). Upshot is that QuickLZ (also GPL) appears to be the king, and FastLZ edges out LZF on the permissive side.

Note that gzip 1.4.0 is the current version, released in January; I don’t know whether its performance has changed. zlib is where the main performance work in deflate-land is happening; you might try its minigzip. There are lots of options to tweak things with assembler code, too; I think the default is still fully C-based, but I’m not sure. gzip, Zip and zlib are identical in terms of algorithm–only headers/footers differ. (xz and 7-Zip’s LZMA and LZMA2 are also identical. xz is based on liblzma, a rewrite of parts of 7-Zip’s LZMA SDK.)

On the high end, you might want to look at Matt Mahoney’s zpipe/ZPAQ code. In my tests it wasn’t much good for anything but benchmarks (i.e., two ORDERS OF MAGNITUDE slower than xz’s slowest), but it did manage to eke out an extra couple of percentage points on compression ratio.

Finally, the QuickLZ site has some links to other large benchmark sites. Some of the things they test may be of interest, too. (Or not.)

Great write-up. I love the graphs. I am definitely part of the Huffman crowd, the Ray Huffman crowd that is!

There are other things to consider.

– time: I use pigz to compress with n threads (so the time is close to t/n)

– search: Unix tools like gz, bz (grep -J is so cool).

Hi,

Next time you run Benchmarks, feel free to email me first at matthew@phoronix.com. There’s lots of interesting things that we can help you with on setting the benchmarking up more efficiently.

Matthew

Very useful post – thank you.

I linked to it at my blog:

http://blog.oxplot.com/2011/09/huge-unix-file-compresser-shootout-with.html

That’s awesome. I love lzo. Doesn’t surprise me a bit that you can trade some CPU overhead and get some I/O speed in the trade.

Markus, I wrote you e-mail, have you seen it?

I’m compressing some binaries with lzop and I wonder how much it matters whether the input files are sorted by filetype or other similarities, to enhance duplicate detection; I know that matters quite a lot for bzip2, which is not nearly as smart as 7z.
